Cheap Personal Backup using AWS Glacier

Posted by Klaus Eisentraut in scripts

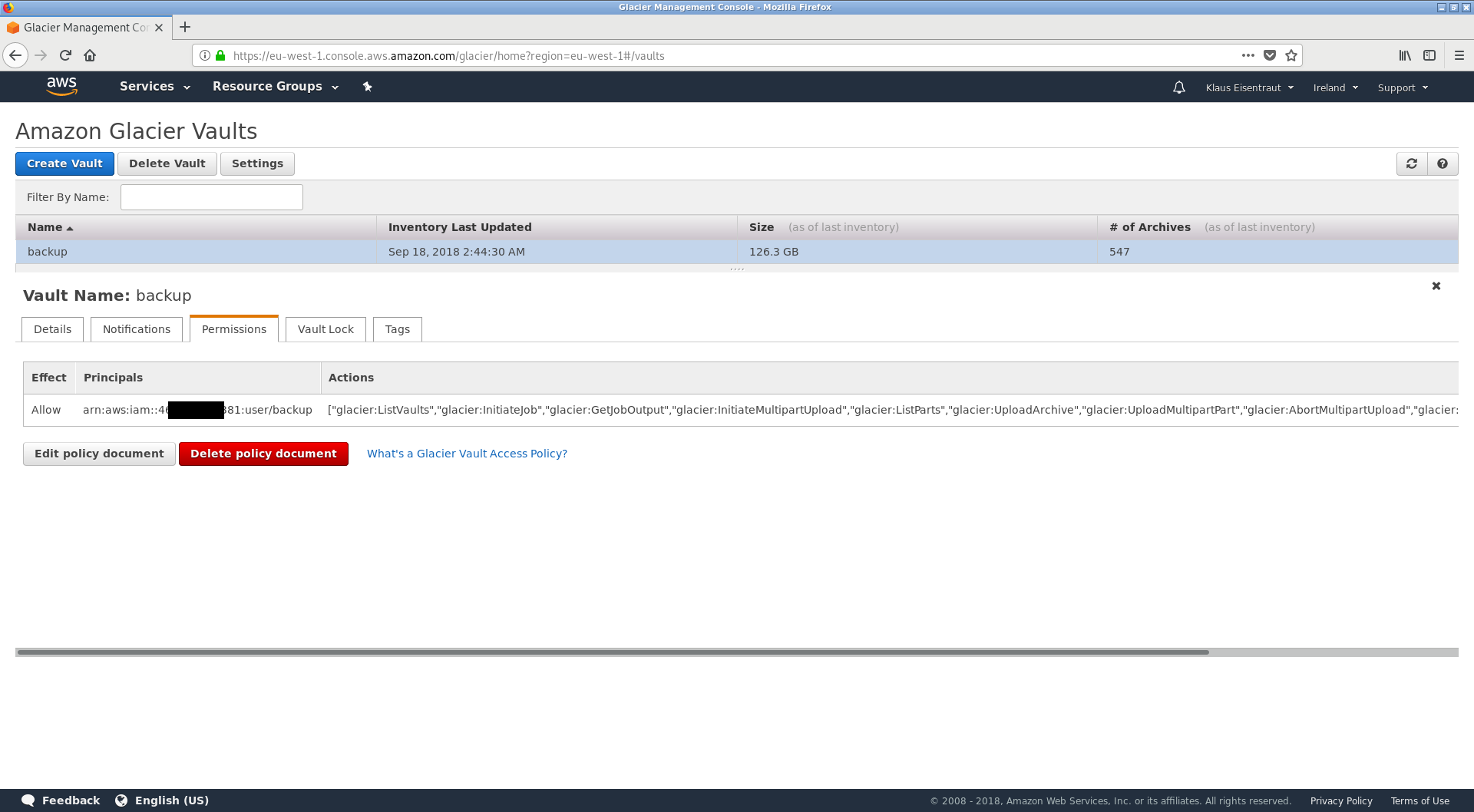

Over two years ago, I wrote an article about how I back up my personal data with AWS S3. Since then, my data has grown to around 126 GB, and I felt the urge to switch to AWS Glacier, which is even better suited and cheaper. With Glacier, I pay only around 50 cents per month to store my 126 GB, and around 12 euros if I ever need to restore everything. Uploading data is basically free, as I only upload large archives (10 MB to 4 GB).
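As a quick sanity check of the monthly figure, here is a minimal sketch of the arithmetic. It assumes the storage price of 0.4 ct/GB/month that is quoted for eu-west-1 further down in this article; current prices may differ, and the function name is only illustrative.

```bash
# Rough monthly storage cost in cents: size in GB times price per GB and month.
monthly_cost_cents() {
    # $1 = stored data in GB, $2 = price in cents per GB and month
    awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.1f\n", gb * rate }'
}

monthly_cost_cents 126 0.4   # prints 50.4
```

Restore costs depend on retrieval and transfer pricing, so they are not covered by this sketch.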

Assumptions and Simplifications

The assumptions from two years ago are still valid for me. If they don't apply to you as well, my setup will probably not fit your needs.

- To make things easier, I only back up immutable archives. For instance, a collection of photos of a given event will never change later on. If a single file inside a folder changes (which has never happened so far), I'm willing to delete and re-upload the whole archive.

- No data is removed ever, only new archives are added.

- Everything should be stored on the HDD in my laptop, on an external HDD and in the cloud, i.e. I will be following the 3-2-1 rule.

- Everything should be encrypted; neither Amazon nor the NSA should be able to read any of my data.

Preparation

Installing the AWS Command Line Interface (aws-cli) was very easy, as it is in the Arch Linux repository: a simple pacman -S aws-cli was enough.

After creating an AWS account, I created an AWS IAM user named backup.

Then I added the credentials for the IAM user to my laptop's local configuration.

It looks like this:

$ cat ~/.aws/credentials

[default]

aws_access_key_id = A89ABXXZIVASSDDQ

aws_secret_access_key = +jtJLidi3ld9vlsL9sl9dls9zoif/

Then I set the AWS region eu-west-1 (Ireland) as the default region. I chose eu-west-1 because it is the only region in the European Union that offers the cheapest storage price of 0.4ct/GB/month.

$ cat ~/.aws/config

[default]

region = eu-west-1

output = json

Afterwards, some configuring in the AWS console was necessary.

In detail, a Glacier vault named backup was created and the previously created IAM user backup was granted read-/upload-permissions.

This was done by adding the following policy to the vault's access policy.

It grants only upload- and read-privileges, but the IAM user cannot delete any data.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::46XXXXXXXX91:user/backup"

},

"Action": [

"glacier:ListVaults",

"glacier:InitiateJob",

"glacier:GetJobOutput",

"glacier:InitiateMultipartUpload",

"glacier:ListParts",

"glacier:UploadArchive",

"glacier:UploadMultipartPart",

"glacier:AbortMultipartUpload",

"glacier:CompleteMultipartUpload"

],

"Resource": "arn:aws:glacier:eu-west-1:46XXXXXXXX91:vaults/backup"

}

]

}
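To double-check that a policy like the one above really grants no delete permissions, a simple text check can help. The following is only a sketch: the helper name and the idea of saving the policy to a local file are my assumptions, and it is a plain pattern match, not a real policy evaluator (it ignores Deny statements, NotAction and a bare "*" action).

```bash
# Hypothetical helper: succeeds only if the policy file lists no
# glacier:Delete* action and no glacier:* wildcard.
policy_is_append_only() {
    # $1 = path to a locally saved JSON policy file
    if grep -Eq '"glacier:(Delete[A-Za-z]*|\*)"' "$1"; then
        return 1    # a delete action or the wildcard is present
    fi
    return 0
}
```

With the policy saved as, say, vault-policy.json, the call `policy_is_append_only vault-policy.json && echo "no delete actions"` prints the message only if nothing suspicious was found.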

Here is a screenshot of all the glacier settings:

Encrypting a folder and storing it centrally

For the actual backup, I decided to do it the following way:

- Let's assume I got a new folder that I want to back up. For instance, a friend sent me some pictures from his birthday party, and they are now stored in a local folder DCIM somewhere on my laptop.

- First, I run a script which takes the folder to be backed up and the name for the backup as arguments. The name of the backup has some restrictions and must have a format like 2018-09-28_Backup_demo.

- The script prompts me for an encryption passphrase, which is identical for all archives. Symmetric encryption has the great advantage that there is no private key file I could lose. It is secure enough, too, as long as the passphrase is strong (I'm using much more than 20 characters!).

- With a passphrase this long, typos are likely, so the script checks that I didn't mistype it. After all, an encrypted backup which I can't decrypt is useless.

- If everything is fine, the archive 2018-09-28_Backup_demo.tar.gpg is created, which contains the encrypted data. This file is stored with all other archives in a dedicated folder /scratch/backup/awsbackup/ on the local hard disk of my laptop.

You can easily adapt the script, just modify all lines which are marked with "TODO".

#!/bin/bash

# check correct usage!

if [ ! "$#" -eq "2" ]; then

echo "Usage: $0 /path/to/folder/which/is/backuped 201X-XX-XX_name_aws_backup"

exit 1

else

# check if folder exists

if [ ! -d "$1" ]; then

echo "Folder '$1' does not exist!"

exit 1

fi

# check if name has format YYYY-MM-DD_alphanumeric_description

if [[ ! "$2" =~ ^[12][0-9X]{3}-[01X][0-9X]-[0-3X][0-9X]_[a-zA-Z0-9_-]+$ ]]; then

echo "Name '$2' is invalid!"

exit 1

fi

# read and check passphrase

echo -n 'enter passphrase: '

read -r MYPP

# TODO: adjust your (shortened) passphrase hash here, this acts as a safeguard

# (a backup encrypted with the wrong key is unusable and dangerous)

if [[ ! $(echo -n "$MYPP" | sha512sum) =~ ^be8542b.*$ ]]; then

echo "wrong password"

exit 1

fi

# ensure that the temporary folder exists

mkdir -p "/tmp/awsbackup/"

# encrypt everything, note that GnuPG does compression already!

echo "Creating encrypted archive \"$2.tar.gpg\" ..."

ln -s "$(realpath "$1")" /tmp/awsbackup/"$2"

cd /tmp/awsbackup/ >/dev/null

# TODO: change your path here from /scratch/backup/awsbackup/ to whatever suits you

tar cvh "$2" | gpg --s2k-mode=3 --s2k-cipher-algo=AES256 --s2k-digest-algo=SHA512 --s2k-count=65011712 --symmetric --cipher-algo=AES256 --digest-algo=SHA512 --compress-algo=zlib --batch --passphrase="$MYPP" > /scratch/backup/awsbackup/"$2".tar.gpg

cd - >/dev/null

# we don't need it anymore, so we overwrite our passphrase

MYPP=0123456789012345678901234567890123456789

echo "Encryption done! Removing symlink ..."

unlink /tmp/awsbackup/"$2"

fi

All it does is run some validity checks, read in a passphrase, check the passphrase for typos by comparing the first few hex digits of its SHA-512 hash against a stored prefix, and finally write the encrypted archive to the backup folder.

The script in action looks like the following (backing up the local folder DCIM):
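To adapt the passphrase safeguard, you need the shortened hash of your own passphrase. Here is a minimal sketch of how it could be computed; the function name is my own, and the length of 7 hex digits simply mirrors the length of the prefix in the script.

```bash
# Print the first 7 hex digits of the SHA-512 hash of a passphrase.
# printf '%s' adds no trailing newline, matching `echo -n` in the script.
passphrase_prefix() {
    # $1 = passphrase
    printf '%s' "$1" | sha512sum | cut -c1-7
}

passphrase_prefix 'abc'   # prints ddaf35a
```

Keeping only a short prefix catches typos while revealing very little about the passphrase itself. Note that typing a real passphrase on the command line may leave it in your shell history.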

$ ./awsbackup.sh DCIM/ 2018-09-28_Backup_demo

enter passphrase: not-my-passphrase

wrong password

or, if you use the correct passphrase:

$ ./awsbackup.sh DCIM/ 2018-09-28_Backup_demo

enter passphrase: my-supa-dupa-s3cure-p4ssphr4se!

Creating encrypted archive "2018-09-28_Backup_demo.tar.gpg" ...

2018-09-28_Backup_demo/

2018-09-28_Backup_demo/IMG0002.JPG

2018-09-28_Backup_demo/IMG0001.JPG

2018-09-28_Backup_demo/IMG0003.JPG

2018-09-28_Backup_demo/IMG0004.JPG

Encryption done! Removing symlink ...
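The name check can also be tried out on its own before running the whole script. The sketch below is an assumption-laden variant, not the script's exact check: it anchors the pattern at both ends and also allows digits in the description, and the function name is hypothetical.

```bash
# Succeeds if the name looks like YYYY-MM-DD_description. Unknown digits of
# the date may be written as X, e.g. 201X-XX-XX_old_photos.
valid_backup_name() {
    # $1 = proposed backup name (assumed to contain no newline)
    printf '%s\n' "$1" |
        grep -Eq '^[12][0-9X]{3}-[01X][0-9X]-[0-3X][0-9X]_[A-Za-z0-9_-]+$'
}

valid_backup_name '2018-09-28_Backup_demo' && echo "name ok"
valid_backup_name 'Backup demo' || echo "name rejected"
```

Restricting names to letters, digits, underscores and hyphens keeps them safe to use in paths and shell commands without extra quoting surprises.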

Uploading to AWS Glacier

So far, I have only encrypted a folder of images and put it in a well-defined location on my main hard disk. So I haven't made any backup yet.

For uploading files to Glacier I use another script.

As there is no simple ls command for AWS Glacier, the script needs to store some JSON metadata in a second folder /scratch/backup/awsbackup.glacier.

If my laptop's hard disk crashes and I lose the JSON metadata, I can still download everything, but I'll have to wait at least an additional 24 hours to download the latest inventory from AWS Glacier.
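Because the JSON metadata is the local record of what has been uploaded, it can be used for a dry run that touches nothing on AWS. The following sketch (the function name is an assumption of mine) lists every archive that has no metadata file containing an "archiveId" entry yet.

```bash
# List archives in $1 that have no JSON file with "archiveId" in $2.
pending_uploads() {
    # $1 = folder with *.tar.gpg archives, $2 = folder with JSON metadata
    for f in "$1"/*.tar.gpg; do
        [ -e "$f" ] || continue          # the glob matched nothing
        name=$(basename "$f")
        if ! grep -q '"archiveId":' "$2/$name.json" 2>/dev/null; then
            printf '%s\n' "$name"
        fi
    done
}
```

With the folders from this article, `pending_uploads /scratch/backup/awsbackup /scratch/backup/awsbackup.glacier` would print the archives that still need to be uploaded.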

The script /scratch/backup/upload_glacier.sh looks like the following.

Again, you can adapt it to your situation by modifying all lines with a TODO comment.

#!/usr/bin/env bash

#

# backup script which uploads the immutable contents of one folder to AWS glacier

# TODO: does not work for files > 4GB ...

#

# written by Klaus Eisentraut in 2017, Licence WTFPL

# TODO: adjust your folders accordingly

AWSPATH="/scratch/backup/awsbackup"

GLACIERPATH="/scratch/backup/awsbackup.glacier"

VAULT="backup"

# read and check passphrase

echo -n 'enter passphrase: '

read -r MYPP

# TODO: adjust your (shortened) passphrase hash here, this acts as a safeguard

# (a backup encrypted with the wrong key is unusable and dangerous)

if [[ ! $(echo -n "$MYPP" | sha512sum) =~ ^be8542b.*$ ]]; then

echo "wrong password"

exit 1

fi

cd "$AWSPATH" || exit 1

ls *.tar.gpg | sort -R | while read -r i; do

if [ -s "$GLACIERPATH"/"$i".json ]; then # check for non empty file

if grep -q '"archiveId":' "$GLACIERPATH"/"$i".json; then # check if file contains "archiveId"

# already successfully uploaded

echo "$i was already uploaded to glacier."

else

# strange file

echo "WARNING: $i seems corrupt! Please fix manually, see file below:"

echo "----------------------------------------"

cat "$GLACIERPATH"/"$i".json

echo "----------------------------------------"

fi

else

# encrypt filename deterministically (nosalt)

e=$(echo -n "$i" | openssl enc -e -aes-256-cbc -pbkdf2 -iter 100000 -nosalt -k "$MYPP" -a | tr -d '\n' )

echo "$i"" -> ""$e"

aws glacier upload-archive --vault-name "$VAULT" --account-id - --body "$i" --archive-description "$e" 2>&1 | tee "$GLACIERPATH"/"$i".json

fi

done

MYPP=01234567890123456789012345678901234567890123456789

The simple openssl enc command encrypts the filename with the given passphrase, but because of -nosalt it does so deterministically, which is not perfectly cryptographically secure.

For example, if two archives have filenames whose first 16 bytes are identical, those first 16 bytes will be encrypted identically, and the fact that the names start identically is obvious to Amazon.

However, the plaintext of the filename should still be safe against decryption.
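When restoring from an inventory, the encrypted descriptions have to be turned back into filenames. Here is a minimal sketch of both directions; the function names are mine, the encryption command is the one from the upload script, and the decryption options are my assumption of the matching reverse call. It needs OpenSSL 1.1.1 or newer because of -pbkdf2.

```bash
# Same command as in the upload script: deterministic (unsalted) encryption.
encrypt_name() {
    # $1 = passphrase, $2 = filename
    printf '%s' "$2" |
        openssl enc -e -aes-256-cbc -pbkdf2 -iter 100000 -nosalt -k "$1" -a |
        tr -d '\n'
}

# Reverse direction: -A tells openssl that the base64 text is on one line.
decrypt_name() {
    # $1 = passphrase, $2 = encrypted archive description
    printf '%s\n' "$2" |
        openssl enc -d -aes-256-cbc -pbkdf2 -iter 100000 -nosalt -k "$1" -a -A
}
```

Encrypting the same filename twice with the same passphrase yields the same text, which is exactly the determinism described above. As in the original script, the passphrase is passed with -k and is therefore briefly visible in the process list.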

External HDD Backup

At irregular intervals, I simply copy the two folders involved, /scratch/backup/awsbackup and /scratch/backup/awsbackup.glacier, to my external HDD.
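The copy step could be scripted roughly as follows. This is only a sketch under my own assumptions: the function name is hypothetical, the external HDD is assumed to be mounted at a path you pass in, and the comparison right after copying is a basic sanity check rather than a guarantee that the data is safely on disk.

```bash
# Copy each given folder into a target directory and compare the result.
copy_and_verify() {
    # $1 = target directory, remaining arguments = folders to copy
    target=$1
    shift
    for src in "$@"; do
        cp -a "$src" "$target"/ || return 1
        diff -r "$src" "$target/$(basename "$src")" >/dev/null || return 1
    done
}
```

A call could look like `copy_and_verify /mnt/external /scratch/backup/awsbackup /scratch/backup/awsbackup.glacier`, where /mnt/external is a placeholder for the mount point. Since archives are immutable and only new ones are added, a tool that skips existing files would be faster for large collections.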

Verifying the local backups

As Glacier calculates a hash of the backed-up files, I have an additional useful script /scratch/backup/verify.sh.

This script calculates the checksums of all local *.tar.gpg archives and checks whether each checksum appears in the JSON metadata of a successful upload.

If one of the local *.tar.gpg archives suffered bit rot, its checksum would no longer match and the error would be detected.

Then I would restore the original file from either the other local copy (external HDD or laptop HDD) or from AWS Glacier.

In the last two years, this hasn't happened, though.

Please note that this script uses my simple command-line tool awstreehash to calculate the special tree hash used by AWS Glacier.

#!/bin/bash

# TODO: adjust the paths accordingly

ls /scratch/backup/awsbackup/*.tar.gpg | sort | parallel -j2 awstreehash | while read -r h; do

hash=${h:0:64}

grep -Rq -- "$hash" /scratch/backup/awsbackup.glacier/ || echo "error in $h"

done
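The script above searches all metadata files for a hash. A stricter variant would compare each archive only against its own metadata file. The sketch below shows the lookup part. It assumes that the JSON written by the upload script contains a "checksum" field, as the output of aws glacier upload-archive does, and both function names are hypothetical.

```bash
# Print the checksum recorded in one JSON metadata file.
recorded_checksum() {
    # $1 = JSON file written by the upload script
    sed -n 's/.*"checksum":[[:space:]]*"\([0-9a-f]*\)".*/\1/p' "$1"
}

# Succeeds if the given tree hash equals the recorded checksum.
checksum_matches() {
    # $1 = locally computed tree hash, $2 = JSON metadata file
    [ "$(recorded_checksum "$2")" = "$1" ]
}
```

This would also catch the unlikely case where an archive's hash happens to appear only in the metadata of a different archive.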

Testing the restore process

Finally, I wrote down the password for my AWS account as well as the encryption passphrase and stored the notes at two different locations. Furthermore, I told a relative about it, and he was able to restore the backup using the AWS Console and my notes. This makes me pretty confident that I'll never lose any personal data.